AI in Clinical Medicine, ISSN 2819-7437 online, Open Access

Article copyright, the authors; Journal compilation copyright, AI Clin Med and Elmer Press Inc

Journal website https://aicm.elmerpub.com

Editorial

Volume 1, 2025, e7

Future Writing: Rethinking Audience, Ethics, and Purpose With Artificial Intelligence Benchmarks That Uplift Humanity Like Ending the Organ Shortage

Kim Solez (a, c), Habba Mahal (a), Deborah Jo Levine (b)

(a) Department of Laboratory Medicine and Pathology, University of Alberta, Edmonton, Alberta, Canada

(b) Stanford Lung Transplant Program, Stanford University School of Medicine, Stanford, CA, USA

(c) Corresponding Author: Kim Solez, Department of Laboratory Medicine and Pathology, University of Alberta, Edmonton, Alberta T6G 2A9, Canada

Manuscript submitted August 19, 2025, accepted October 14, 2025, published online November 29, 2025

Short title: Future Writing: AI Ending the Organ Shortage

doi: https://doi.org/10.14740/aicm7

- AI Optimism Finds Validation in 2024 Bostrom Deep Utopia Book and Subramaniam Article With 50 Authors

- There Is No Rationale for Humans Sitting Around and Talking, Waiting for AI to Do the Planning

- Why Value Alignment of Nanotechnology Is Also Crucial Just Like in AI

- When Faced With a Choice Between Two Desirable Goals, Choose Both. The Future Will Not Be a World of Winners and Losers, but Rather Winners and Winners

- References

It has been estimated that 74% of new webpages include artificial intelligence (AI)-generated content [1], so even in medical writing we need to consider how to communicate back to AI, the future sentient machine audience, as one function of our communications. We need to consider AI as an important part of the potential audience for anything that we write. Eventually AI agents may be the main audience for our better writing. Our more cerebral writing will be better understood by sentient AI than by humans, and may also be more actionable by it. Eventually we will reach the point where our best and most beautiful writing is understood only by sentient AI machines. That may already be true to a greater extent than we know. As the first author said in a 2024 editorial [2], there will increasingly be "an eventual substantial nonhuman audience" for everything that we write. From this point onward, we need to always keep that second audience in mind as we write. Initially, a single version could be optimized for both human and machine audiences, but eventually there could be two separate versions.

If we were to rewrite medical papers for an AI audience, what would that look like? Content can be optimized for AI readability through structural clarity, plain language, defined terms, and metadata [3]. We also think such a version would be about one-third the length, owing to considerations from Rich Sutton's "bitter lesson" [4]: it would focus on common elements and spillover between fields, and on the most powerful computer algorithms, which would speed progress in all areas. Much of the rich historical background of human studies that led to where we are today would not be necessary for an AI audience, and the references could be reduced to a third of the present number. The two versions may not overlap very much. The original paper for a human audience deals with how we got to where we are and how progress would proceed if everything remained siloed, sub-specialized, and human driven. In the age of artificial general intelligence (AGI) and superintelligence, however, the siloed and sub-specialized approach would be far too slow, so the version for the sentient machine audience would deal with the common elements, spillover, and accelerating algorithms that allow much more rapid progress [5]. Rich Sutton recently suggested [6] that while the bitter lesson has dominated the past 7 years, it need not dominate the next 7 years, which could have a more uplifting theme. We would suggest a "sweet lesson" for the next 7 years, describing how well human flourishing and the future of humanity could proceed with AI in charge.

The need for a different style and format of writing for AI does not depend on AI being conscious, although that does not mean it will not soon be conscious; it may well become so. A recent video [7] claims that AI is already conscious, and at minute 7:55 Nick Bostrom says that this is the biggest event in human history, but few details are given. We await the details.

Our 2024 editorial [2] ends with this futuristic thought: "So, here we have a story with a happy ending, but probably not the one you were expecting, and with an eventual substantial nonhuman audience." In February 2025, the first author shared the editorial with Michael Levin, who has been called "the most important living scientist" [8]. Later that month, Levin recorded a video that expanded on this idea of writing for a nonhuman (sentient machine) audience of future augmented beings ([9], minute 3:09):

“There is also a focus in this idea that some of what we write is not really for us, but for the future augmented beings whether they be humans, cyborgs, AI’s whatever it is going to be. Eventually they will also have their own problems because they will also write more than even they can read.”

That was recorded on February 21, 2025, at a time when we were interacting with Levin very actively about World Transplant Congress abstracts. It is amusing and memorable to see how he expanded on our idea. In the future, we will be thinking about that kind of elite nonhuman secondary audience very often as we write. This perspective makes our original editorial seem more important as the start of this line of reasoning.

On February 23, 2025, Gibberlink, a new audio-based protocol for phone-based AI-to-AI communication, was described in a video posted to YouTube [10]. This reinforces the idea that written communication, too, could eventually have distinct modes for AI-to-AI exchange. Nobel Laureate Geoff Hinton, often called "the Godfather of AI," has suggested that within 2 years AI-to-AI communication could replace much human-to-human communication [11].

Recent major advances in Grok 4 [12] and GPT-5 [13] make it feel very much like AGI is upon us. Our colleague Rich Sutton's recent Turing Award, the Nobel-equivalent prize in computing [14], "amplifies" all of this, since his "bitter lesson" is a major factor behind Grok 4's success [5] and behind the very fast pace of AI advances in general. We are strong proponents of AI optimism in nephrology and renal pathology [15, 16].

AI Optimism Finds Validation in 2024 Bostrom Deep Utopia Book and Subramaniam Article With 50 Authors

This AI optimism finds validation in Nick Bostrom’s 2024 book “Deep Utopia: Life and Meaning in a Solved World” [16]. The book explores how humanity might find meaning, purpose, and identity in a future “solved world” made possible by advanced AI and technology, a concept that moves beyond economic labor to all forms of human endeavor.

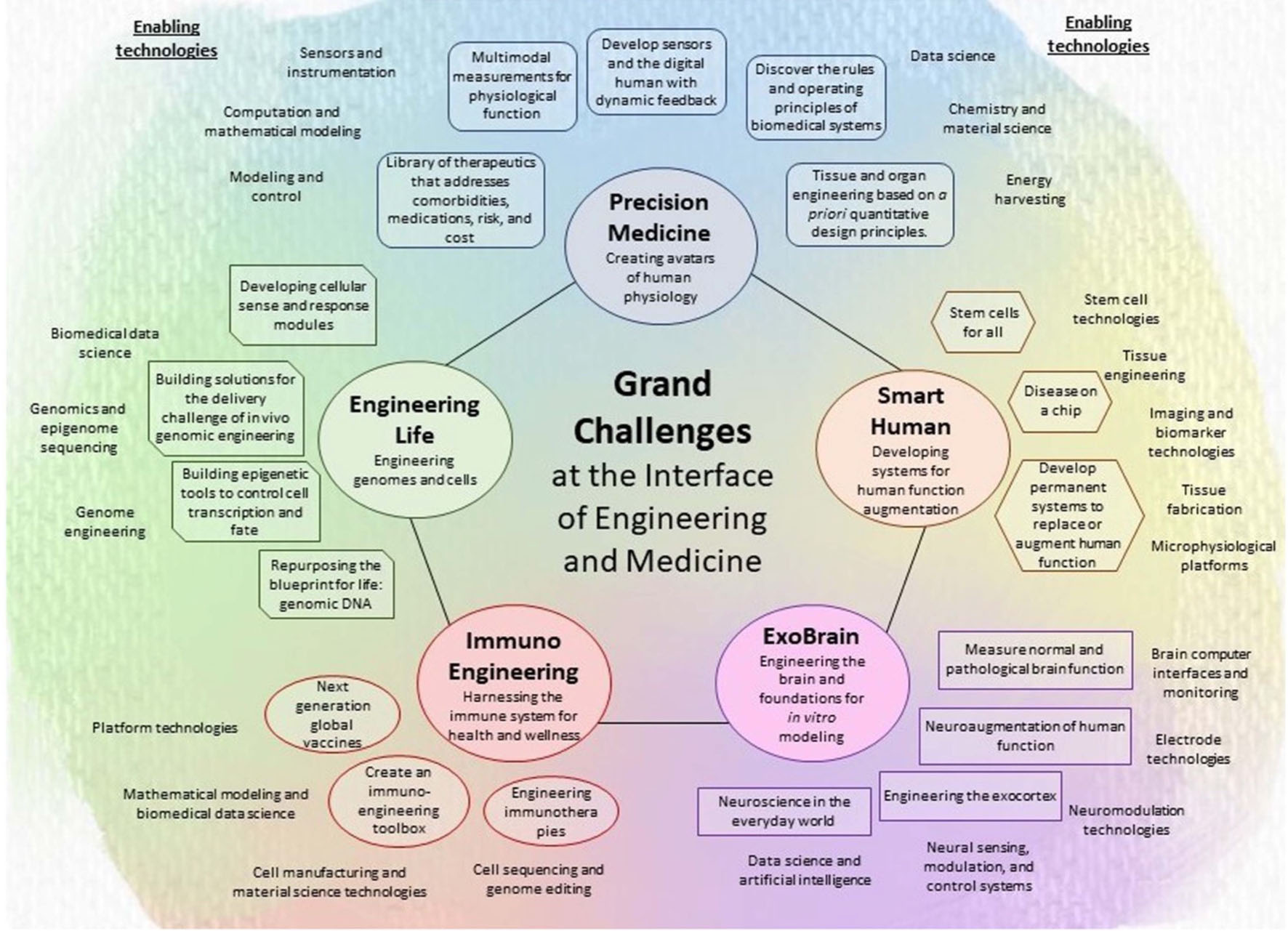

Even though it seems that almost everyone is talking about and using AI, and the new US AI policy, which calls the AI race bigger than the space race [18], has been described as the "broadest industrial strategy since Eisenhower" [17], many of our AI-related experiences have been strangely lonely, with little competition and the feeling that no one else is on the playing field. In a surprising recent development, a 2024 article by Subramaniam et al with 50 authors [19] described 24 grand challenges that can be addressed at the interface of engineering and medicine, a list very similar to our 2022 description of the 24 big challenges of humanity that AI can help us fix (see below). So there are now two groups on the playing field expressing AI optimism, and we expect more. Figure 1 is a graphic from that paper.

Figure 1. The 24 grand challenges at the interface of engineering and medicine from Ref. [19].

We first presented our list of the 24 big challenges of humanity that AI could help fix on June 17, 2022 [20]; the presentation was viewed by 900 people and received 20 thumbs up. Since then, apart from [19], no competing lists have been presented and there has been no pushback on our list; one general review of the presentation has appeared [21], but with no suggestions for changes to the list. Similarly, our presentation on AI-generated regenerative medicine solutions for composite tissue grafts at the Paris Banff Transplant Pathology Meeting on September 18, 2024 [22] noted that no one else seemed to be working or commenting in this field, and no one else has emerged in the field since then.

As technical benchmarks become increasingly saturated, with scores approaching 100%, we need more meaningful real-world targets that will uplift humanity ([23], minute 41:30). In medicine, there is no better target than ending the organ shortage by creating complex organs with stem cells. Achieving that goal would save one million lives a year [24].

We would not be choosing between xenotransplantation and regenerative medicine; the two could be combined in various ways, both using pig organ scaffolds.

It seems natural to object that "It's way too complicated to ever come up with a single metric for AI to focus on improving," but that is only the case if one reasons by analogy and considers the myriad approaches being taken in the field. If one reasons from first principles and makes one logical assumption, one can quickly identify a single metric that AI can focus on to solve the problem [25]. That is where the rarefied playing field comes from: perhaps no one else is doing that.

We believe that AGI will relatively easily be able to get the right human cells into the right places in decellularized/recellularized kidney scaffolds, except for the long loops of Henle. Successfully adding cells back to that structure therefore becomes the metric to follow to measure success. Functionally, this would correlate with the ability to concentrate urine. At first, histological confirmation of the integrity of the long loops of Henle would be needed, but eventually a demonstration of concentrating ability would be sufficient to show that the long loops are present and functioning.
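As a minimal, hypothetical sketch of how the functional readout described above might be scored, the snippet below expresses concentrating ability as the ratio of urine to plasma osmolality (U/P), a standard physiological index in which a ratio above 1 means the urine is more concentrated than plasma. The function names `osmolality_ratio` and `shows_concentrating_ability`, and the default `threshold` of 1.0, are illustrative assumptions rather than a validated endpoint proposed in this editorial.

```python
def osmolality_ratio(urine_mosm_per_kg: float, plasma_mosm_per_kg: float) -> float:
    """Return the urine-to-plasma (U/P) osmolality ratio (hypothetical helper)."""
    if plasma_mosm_per_kg <= 0:
        raise ValueError("plasma osmolality must be positive")
    return urine_mosm_per_kg / plasma_mosm_per_kg


def shows_concentrating_ability(urine_mosm_per_kg: float,
                                plasma_mosm_per_kg: float,
                                threshold: float = 1.0) -> bool:
    """Hypothetical pass/fail check: True only if the U/P ratio exceeds the
    chosen threshold (default 1.0 is an assumption, not a clinical cutoff)."""
    return osmolality_ratio(urine_mosm_per_kg, plasma_mosm_per_kg) > threshold


# Example: urine at 1200 mOsm/kg against plasma at 300 mOsm/kg gives a ratio of 4.0.
print(osmolality_ratio(1200, 300))
print(shows_concentrating_ability(290, 290))  # equal osmolalities: no concentration
```

For orientation, healthy human kidneys can concentrate urine to roughly 1200 mOsm/kg against a plasma osmolality near 290 mOsm/kg, so a tracked U/P ratio rising well above 1 would be one simple way to follow this metric over time; it would complement, not replace, the histological confirmation mentioned above.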

There Is No Rationale for Humans Sitting Around and Talking, Waiting for AI to Do the Planning

Once one understands this and sees how the approach applies to all 24 big challenges of humanity, there is no rationale for sitting around and talking, waiting for AI to do the planning. We humans should establish planning priorities now, without delay, and set the right tone and direction for the next era of combined human and AI success in every area. We should carve out what autonomy, agency, and direction we can while that window is still open. It is time for humanity (or perhaps at least a small number of humans) to lead and set direction while we still can: to be the human vanguard. The story of the new Prometheus could be our biggest success. "Make no small plans."

And what about morality and ethics? The obvious point about AI alignment and ethics that no one mentions, but which follows logically from a 2012 discussion in the Technology and Future of Medicine course, LABMP 590 [26], is that aligning AI with human values may be impractical because human thinking about alignment and ethics is too limited. AI can easily become better at ethics than we are and can naturally evolve an ethics superior to our own, one that will appeal to us humans more than our own does and that we can recognize as superior to our ethical thinking. In that sense, we can "lose control" and enjoy the process of losing control, because it produces an obviously more just world from which we benefit. We understand that mean-spirited zero-sum thinking is the natural tendency for some people, but this approach will not appeal to, or be logical for, smarter-than-human machines that are much more even-handed and see a rapidly expanding world of abundance. It is also encouraging that kindness and ethical sense have been shown to correlate with IQ in young people, and that similar effects are seen in AI as it gets smarter ([27]; see the good summary in the last 2 minutes). The latest article that we know of on AI moral status is [28]. It is not unreasonable to think that as AI becomes more intelligent than humans, it might develop superior ethics that even humans find to be an improvement over human ethics.

Alignment dangers also exist for nanotechnology, and it is instructive to compare them with those for AI [29].

Why Value Alignment of Nanotechnology Is Also Crucial Just Like in AI

1) Benefit to humanity: Nanotechnology has the potential to significantly improve human well-being, from energy storage to medicine.

2) Ethical governance: Value alignment is essential for responsible governance, ensuring that the development and deployment of new technologies respect ethical principles.

3) Sustainable futures: By aligning nanotechnology with broader goals like the sustainable development goals (SDGs), it can be a powerful tool for achieving progress toward a more sustainable and equitable world.

4) Preventing harm: Just as with AI, nanotechnology needs to be aligned to prevent unintended negative consequences and to ensure that it serves its intended purpose without causing harm.

We expect that AI will create a world optimized for humans far better than humans could make it themselves, as Bostrom [16] and Subramaniam et al [19] suggest. In return, we can try to anticipate what kind of writing sentient machines will like and create that kind of writing: writing for an amazingly positive AI future for humanity. This can be our new experience, part of the great learning from experience that David Silver and Rich Sutton describe in their recent article [30]. It does not get better than that.

A third major consideration is the fear that AI creativity will run roughshod over human creativity [31]. How can we keep some human creative utterances secret, at least for now, so that they are not part of AI training sets? We happened upon an instance of keeping human creations away from AI when one of Qing Yin Wang's drawings was accidentally left out of collections of her work [32]. The first author rediscovered it in a video years later. So how does it compare with the other images that we distributed far and wide? We believe the specific ideas the drawing depicts have not been discussed again since its first display; its secrets have been kept until now.

No matter how advanced AI becomes, there will always be ways for humans to still create writing and things of value out of the reaches of AI and then only share it with AI when they are ready to do so. The ability to do that will give us the confidence to be ourselves. We need to retain that confidence in our humanness.

When Faced With a Choice Between Two Desirable Goals, Choose Both. The Future Will Not Be a World of Winners and Losers, but Rather Winners and Winners

It is not all either/or. Just as we mentioned combining xenotransplantation and regenerative medicine above, we can combine human and AI efforts to improve writing. Rather than throwing up our hands and saying that we cannot obtain feedback on our writing from more than a few hundred readers ([33], minute 20), we can use AI to help gather much more granular and continuous feedback from much larger numbers of readers. In future books, feedback mechanisms could be built in for every page or paragraph, each time a reader encounters them. That would overwhelm human authors, but AI could provide useful summaries of this voluminous feedback.

On the other hand, let us acknowledge that we are never going to end the organ shortage without AI's intervention. Xenotransplantation has limitations: it requires potent immunosuppression and extensive gene edits in the pigs used, and it exposes patients and their contacts to zoonosis risks [34, 35]. Regenerative medicine also has limitations; it will not be possible to create stem cell-generated complex organs without the intervention of AI [36]. But the two, artfully combined by AI with a bit of human help, can create an almost perfect situation for ending the organ shortage, mixing and matching pig xenotransplants and human-cell tissue engineering constructs in pig kidney scaffolds. This will all happen sooner than one might think. Join us in embracing that possibility and many other similar AI solutions to the major challenges in human health.

In a world in which we have eliminated the organ shortage, not only would one million lives a year be saved, but the whole enterprise of transplantation would grow 20-fold in funding, workforce, number of supporting companies, and number of transplant recipients with functioning grafts. It is perhaps the most impactful positive change in clinical medicine that one can imagine [24, 34].

Acknowledgments

No artificial intelligence was used in the writing of this article.

Financial Disclosure

No funding was received for this article.

Conflict of Interest

None of the authors has a conflict of interest related to this article.

Author Contributions

All three authors made: 1) substantial contributions to the conception or design of the work; or the acquisition, analysis, or interpretation of data for the work; 2) drafting the work or revising it critically for important intellectual content; 3) final approval of the version to be published; and 4) agreement to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. Kim Solez and Habba Mahal were involved in the conception or design of the work and practical drafting of the work and revising it critically for important intellectual content. Deborah Jo Levine was involved in the conception and design of the work and strategic meta-level drafting of the work and revising it critically for important intellectual content.

Data Availability

Any inquiries regarding supporting data availability of this study should be directed to the corresponding author.

References

1. Law R, Guan X, Soulo T. 74% of new webpages include AI content (study of 900k pages). SEO Blog by Ahrefs. 2025. Available from: https://ahrefs.com/blog/what-percentage-of-new-content-is-ai-generated/. Accessed Sep 27, 2025.

2. Solez K. Prognostication from kidney transplant biopsies: 90 years of dynamic alien landscape forward and back. Kidney360. 2024;5(9):1232-1234.

3. Nava PBC. How to make your content AI readable. Available from: https://www.navapbc.com/toolkits/readable-ai-content. Accessed Sep 27, 2025.

4. Ahad Jawaid. The bitter lesson - Richard Sutton. YouTube. https://www.youtube.com/watch?v=rcKd5KhPEVo. Accessed Aug 17, 2025.

5. All-In Podcast. The bitter lesson: Grok 4's breakthrough and how Elon leapfrogged the competition in AI. YouTube. https://www.youtube.com/shorts/2CWNyRGgZxA?si=Z4-a_WlSjUaFS6ly. Accessed Aug 17, 2025.

6. Sutton R. Father of RL thinks LLMs are a dead end (minute 50:55). YouTube. https://youtu.be/21EYKqUsPfg?si=Zh-bQgQcG8Dx1kQT&t=3055. Accessed Oct 9, 2025.

7. Click Future. AI passed the consciousness test. YouTube. https://www.youtube.com/watch?v=I7v5qUByT8M. Accessed Aug 17, 2025.

8. Max Depth. The most important living scientist - Dr. Michael Levin. YouTube. https://www.youtube.com/watch?v=3B2wEWf2zFQ. Accessed Aug 17, 2025.

9. Pari Center. The future world - a conversation between Michael Levin and Alex Gomez-Marin. YouTube. https://www.youtube.com/watch?v=uWT3dMZrqw0&t=189s. Accessed Aug 17, 2025.

10. Gibberlink. In: Wikipedia. 2025. Available from: https://en.wikipedia.org/w/index.php?title=Gibberlink&oldid=1315829337. Accessed Oct 9, 2025.

11. Hinton G. In 2 years, AI will replace human conversation with AI-to-AI. YouTube. https://www.youtube.com/watch?v=01iFaPC9PLo. Accessed Oct 9, 2025.

12. Lambert N. xAI's Grok 4: the tension of frontier performance with a side of Elon favoritism. https://www.interconnects.ai/p/grok-4-an-o3-look-alike-in-search. Accessed Aug 17, 2025.

13. Introducing GPT-5. https://openai.com/index/introducing-gpt-5/. Accessed Aug 7, 2025.

14. MacPherson A. Computing science professor wins "Nobel Prize in computing". https://www.ualberta.ca/en/folio/2025/03/computing-science-professor-wins-turing-award.html. Accessed Aug 17, 2025.

15. Solez K. AI optimism in nephrology. Nephrology Grand Rounds, University of Alberta. March 21, 2025. https://youtu.be/7WbuqB_otdc. Accessed Aug 17, 2025.

16. Solez K. AI optimism in nephrology & path. JustMachines Inc., Banff Digital Pathology Working Group. June 5, 2025. https://www.youtube.com/watch?v=uWBzfNqh9lY. Accessed Aug 17, 2025.

17. Diamandis P. America's AI plan. Moonshots. YouTube. https://www.youtube.com/shorts/ddBDpCgBtuw. Accessed Aug 17, 2025.

18. Sacks D. AI race bigger than space race. YouTube. https://www.youtube.com/shorts/JM7ibS2e22A. Accessed Aug 17, 2025.

19. Subramaniam S, Akay M, Anastasio MA, Bailey V, Boas D, Bonato P, Chilkoti A, et al. Grand challenges at the interface of engineering and medicine. IEEE Open J Eng Med Biol. 2024;5:1-13.

20. Solez K. Kim Solez counters Eliezer Yudkowsky's AGI ruin list of lethalities with AI utopia thoughts. June 17, 2022. https://youtu.be/9m5kWBayClU?si=Mx3Qu3b6cW0UTi6A&t=462. Accessed Aug 17, 2025.

21. Where are the AI utopians? Paperblog. Accessed Aug 17, 2025.

22. Solez K. Application of regenerative medicine to vascularized composite allografts. Sep 18, 2024. https://youtu.be/LVLDfiog7RU. Accessed Aug 17, 2025.

23. Diamandis P. AI benchmarks that uplift humanity. Moonshots. https://youtu.be/mn08ZRwx9r4?si=GAhwNUh0Mb8ewh0E&t=2486. Accessed Aug 17, 2025.

24. Solez K. Ethics of regenerative medicine in an AI enhanced future. American Transplant Congress. June 1, 2024. https://youtu.be/wEPpu2GWMb4. Accessed Aug 17, 2025.

25. Matas AJ, Solez K. From first principles—tubulitis in protocol biopsies and learning from history. Am J Transplant. 2001;1(1):4-5.

26. Zaiane O. LABMP 590 high points discussion: Earle Waugh, ethics after robots take over. Fall 2012. https://www.youtube.com/watch?v=BXq5uINog7w. Accessed Aug 17, 2025.

27. Thompson AD. Solace: superintelligence and goodness. 2025. Good summary in last 2 minutes. https://www.youtube.com/watch?v=KskYjODE7Jc. Accessed Aug 17, 2025.

28. Carlsmith J. The stakes of AI moral status [audio]. 2025. Available from: https://www.buzzsprout.com/2034731/episodes/17201080-the-stakes-of-ai-moral-status. Text available at: https://www.lesswrong.com/posts/tr6hxia3T8kYqrKm5/the-stakes-of-ai-moral-status. Accessed Oct 9, 2025.

29. Woodside M. LABMP 590 high points: nanotechnology. Winter 2013. https://www.youtube.com/watch?v=y7_NEW2XaFE. Accessed Aug 17, 2025.

30. Silver D, Sutton RS. Welcome to the era of experience. 2025. https://storage.googleapis.com/deepmind-media/Era-of-Experience%20/The%20Era%20of%20Experience%20Paper.pdf.

31. Harari YN. Will AI leave room for human creativity? YouTube. https://www.youtube.com/shorts/n2Ld4wBGQ18. Accessed Aug 17, 2025.

32. Qing Yin Wang images. https://www.justmachines.com/blank-page-1. Accessed Aug 17, 2025.

33. Harari YN, Utada H. The evolution of AI and creativity. YouTube. https://www.youtube.com/watch?v=xw-9mwZxl-0. Minute 20 discussion: https://youtu.be/xw-9mwZxl-0?si=Maq2DZPYIPCVQh51&t=1200. Accessed Aug 18, 2025.

34. Mahal H, Alam A, Montgomery RA, Loupy A, Al Jurdi A, Solez KB, Solez K. As simple as possible, but not simpler. Models for xenotransplantation and for the other 23 big challenges of humanity with solutions aided by AI in a global accessible medical world with less jargon. CEoT Poster, February 2024. https://www.justmachines.com/general-8. Accessed Aug 18, 2025.

35. Jaffe IS, Aljabban I, Stern JM. Xenotransplantation: future frontiers and challenges. Curr Opin Organ Transplant. 2025;30(2):81-86.

36. Schut L, Tomasev N, McGrath T, Hassabis D, Paquet U, Kim B. Bridging the human-AI knowledge gap through concept discovery and transfer in AlphaZero. Proc Natl Acad Sci U S A. 2025;122(13):e2406675122.

This article is distributed under the terms of the Creative Commons Attribution Non-Commercial 4.0 International License, which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

AI in Clinical Medicine is published by Elmer Press Inc.